Cognitive Dimensions for API Evaluations

Background

During my tenure at Microsoft I introduced cognitive dimensions analysis and drove its widespread adoption as a way to assess and improve the design of Application Programming Interfaces (APIs).

An API is a collection of functions that allows two applications to talk to each other. For a company like Microsoft, with an extensive and diverse developer community, it is vital to get APIs right the first time, because once an API has shipped it is very difficult to fix.

When assessing APIs, developers look at the object model (OM) design (i.e. function parameters and return values, class hierarchy, etc.), security threats and so on. However, that kind of assessment says next to nothing about the usability of the API. How could one generate design guidelines from a usability perspective as well as an OM perspective? The distinction between the two types of guidelines is subtle, but crucial.

OM design guidelines describe how to design according to good software development practice (e.g. ensure there is a default constructor, abide by the “garbage collection” design pattern, etc.), but they say nothing about how users will use the APIs or what they expect to find in one. Furthermore, APIs are developed by different teams, yet a single user may use several of those APIs together in one scenario. What is needed is a framework that can guide and assess the API design as it is developed.

Framework

Methodology

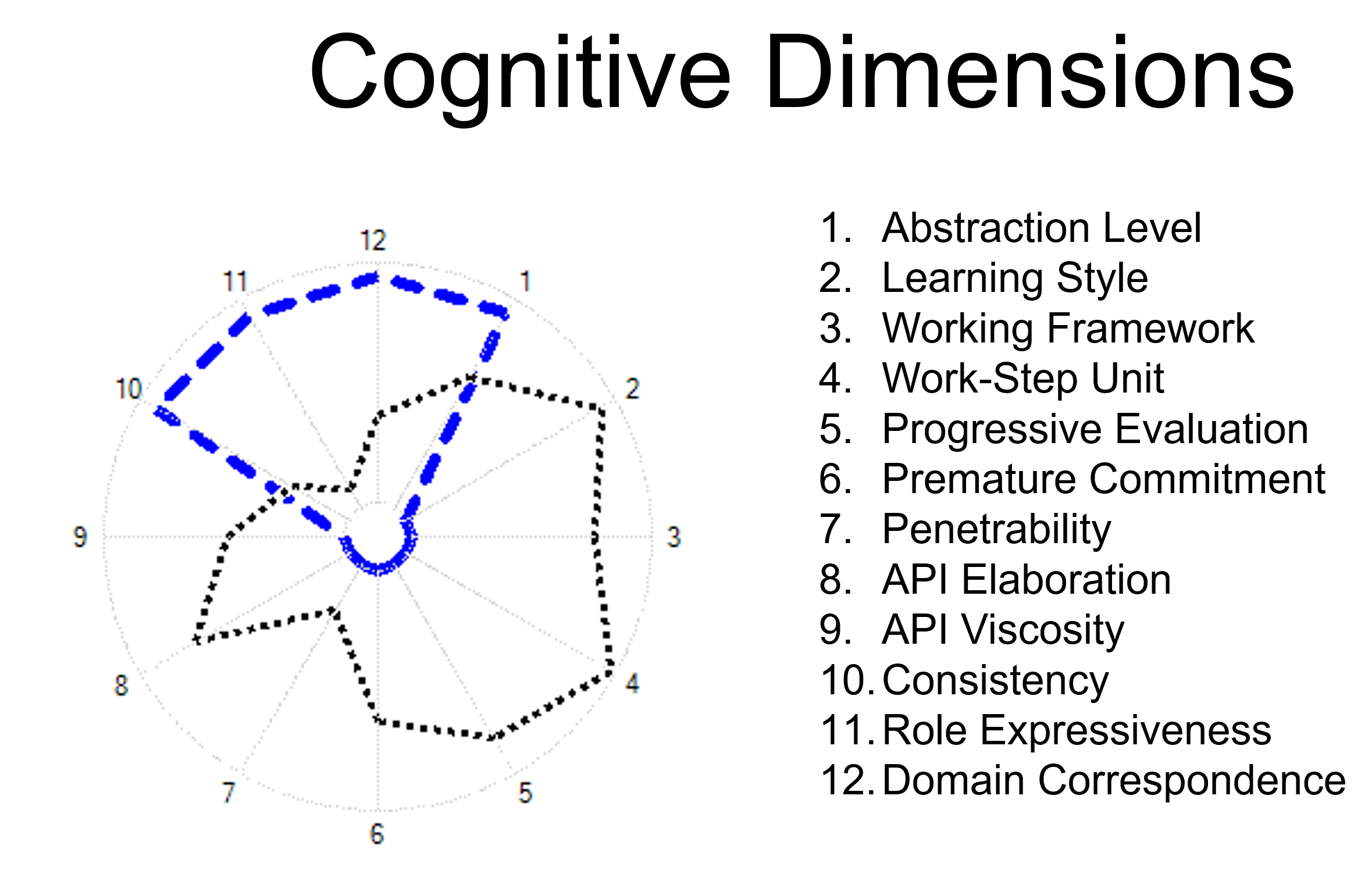

The framework uses a set of twelve predefined dimensions. The dimensions are non-orthogonal and fluid, and they are plotted on a spider graph. Values near the center of the graph are the minimum for a given dimension, and values toward the edge are the maximum.

My team conducted hundreds of qualitative interviews with different developers. From those interviews we identified three basic personas: systematic, pragmatic and opportunistic programmers. Subsequently, we plotted each persona onto the graph and used them as references during testing.

When analyzing a particular API, we gave developers a few programming tasks in the lab and observed them. We then plotted the aggregate results across the twelve dimensions, alongside the profile of the target persona for whom we had designed the API (in this example, the opportunistic programmer). By comparing the persona profile (in blue outline) with the aggregate data, we could see where the API diverged from its intended users and offer design suggestions accordingly.
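The comparison described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the dimension names, the 1 to 5 scale, the scores, and the gap threshold are all hypothetical and are not taken from the actual studies, and only four of the twelve dimensions are shown to keep the example short.

```python
from statistics import mean

# Hypothetical target profile for the opportunistic persona.
# Dimension names and the 1-5 scale are assumptions for illustration.
PERSONA_OPPORTUNISTIC = {
    "abstraction_level": 4.0,
    "learning_style": 1.0,
    "work_step_unit": 2.0,
    "progressive_evaluation": 5.0,
}

# One score sheet per observed participant (made-up numbers).
OBSERVATIONS = [
    {"abstraction_level": 2.0, "learning_style": 3.0,
     "work_step_unit": 2.0, "progressive_evaluation": 4.0},
    {"abstraction_level": 3.0, "learning_style": 4.0,
     "work_step_unit": 2.0, "progressive_evaluation": 5.0},
]


def aggregate(observations):
    """Average each dimension across all observed participants."""
    dimensions = observations[0].keys()
    return {d: mean(o[d] for o in observations) for d in dimensions}


def largest_gaps(persona, observed, threshold=1.0):
    """Return (dimension, gap) pairs whose absolute gap meets the threshold.

    A gap is the observed score minus the persona's expected score.
    Results are sorted with the largest mismatch first.
    """
    diffs = {d: observed[d] - persona[d] for d in persona}
    flagged = [(d, g) for d, g in diffs.items() if abs(g) >= threshold]
    return sorted(flagged, key=lambda item: abs(item[1]), reverse=True)


if __name__ == "__main__":
    observed = aggregate(OBSERVATIONS)
    for dimension, gap in largest_gaps(PERSONA_OPPORTUNISTIC, observed):
        print(f"{dimension}: gap of {gap:+.1f} versus persona profile")
```

In this sketch, the dimensions with the largest gaps are the ones where the API asks something of developers that the target persona does not expect, which is where a design review would start.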

Impact

This new way of looking at APIs had an immediate and powerful impact at Microsoft. We were able to pinpoint the causes of usage breakdowns that stemmed from misaligned expectations, and to help the company avoid costly updates after the APIs had shipped. Here are some of the results:

- Convinced the “transactions” team that the API needed a redesign and helped them complete it within six weeks, a record pace given that we were right before beta 2

- Discovered flaws in the tablet API and led the redesign effort over two rounds of testing

- Helped uncover a massive design flaw in the WinFx API and convinced the team to accept our input for the redesign, which was completed in only eight weeks